AI has undoubtedly benefited many sectors of the economy through automation and other advances. But the surge in its misuse must be addressed too. Cybercriminals now use voice cloning to impersonate people you trust and trick you into handing over money or data.

If you receive a call saying you've won a car in a lottery, you'll probably hang up. But what if the caller sounds like a colleague or your manager asking you to share confidential company information over the phone? You're far more likely to fall into the trap.

“AI will be the best or worst thing ever for humanity.” – Elon Musk, CEO of Tesla.

What is Voice Cloning? How Does AI Voice Cloning Work?

Voice cloning is the process of using machine learning algorithms and artificial intelligence models to generate a synthetic voice that sounds like a specific person. The machine-generated speech can be close enough to the original voice to convince people who know the speaker.

An AI voice cloning attack involves several steps. Here’s how attackers do it:

Step 1: Data Collection

The attacker first needs samples of the target’s real voice. These are often easy to obtain from social media posts, voice recordings, videos, and similar public sources.

Step 2: Processing the Data

Once the attackers have enough samples, the collected audio is split into short segments whose sound characteristics can be analyzed by AI. This data is then used to train the AI model.

Step 3: AI Voice Cloning

Once trained, the AI model can generate speech that imitates the target’s voice, including phrases the person never actually said.

Step 4: Scamming

Once the cloned voice is convincing, scammers use it to deceive their targets and put confidential information at risk.

Effects of Voice Cloning on Business

Since the pandemic, many companies have adopted a work-from-home culture and continue to operate that way. As a result, managers and employees depend heavily on technology such as online meetings and voice calls to stay connected.

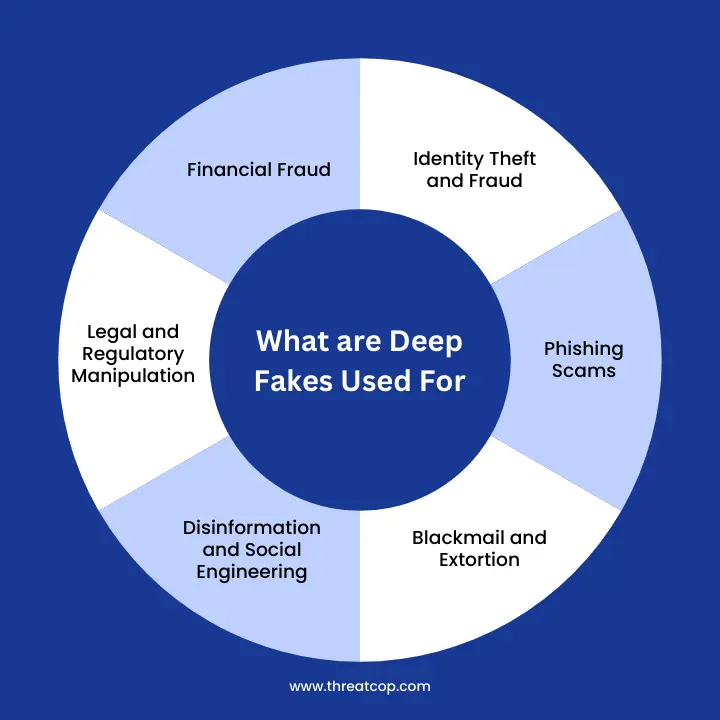

Deepfakes make it challenging to distinguish genuine activity from fraudulent activity. Businesses that fall victim to this kind of cybercrime can suffer financial loss, theft of confidential information, and reputational damage.

How to Identify and Avoid Falling for AI Voice Clones

Don’t Trust Calls/Messages from Unknown Numbers

Never share personal information over a phone call, especially if the number is unknown. If a request seems urgent, verify that it is authentic through a separate, trusted channel before sharing anything.

Listen for an Unnatural Voice

A cloned voice often differs slightly from the original, and these differences can be noticed if you listen carefully. If you hear unnatural pauses or a robotic tone in the speech, treat the call as suspicious and stay alert.

Create Strict Protocols

Establish strict protocols for sharing confidential information within the organization, for example requiring a callback to a known number before acting on any phone request. Everyone should follow the same standard so that sensitive information is handled consistently.
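One way to picture such a protocol is as a small set of checks that every request for sensitive information must pass. The sketch below is illustrative only: the `KNOWN_NUMBERS` directory, the `SensitiveRequest` fields, and the `decide` function are hypothetical names invented for this example, and the rules (callback on a number already on file, plus an independent second approver) are assumptions about what a reasonable policy might include, not a prescribed standard.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical internal directory: identity -> phone number already on file.
KNOWN_NUMBERS = {
    "manager@example.com": "+1-555-0100",
}


@dataclass
class SensitiveRequest:
    claimed_identity: str                  # who the caller says they are
    channel: str                           # e.g. "phone", "email", "in_person"
    called_back_on: Optional[str] = None   # number the employee dialed to confirm
    second_approver: Optional[str] = None  # independent person who signed off


def decide(request: SensitiveRequest) -> str:
    """Apply the (assumed) protocol and return a decision string."""
    number_on_file = KNOWN_NUMBERS.get(request.claimed_identity)
    if number_on_file is None:
        return "deny: identity not in directory"
    # A familiar-sounding voice is not proof of identity: require a callback
    # to the number on file, never to the number that called in.
    if request.channel == "phone" and request.called_back_on != number_on_file:
        return "hold: call back on the number on file"
    # The requester cannot approve their own request.
    if request.second_approver in (None, request.claimed_identity):
        return "hold: needs a second approver"
    return "allow"
```

The point of the design is that nothing in the decision depends on how the caller sounds, so a convincing cloned voice alone cannot move a request to "allow".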

Multi-Factor Authentication

MFA adds a layer of protection to your sensitive information. Even if cybercriminals use a cloned voice to request confidential data or access, they’ll still need a second verification factor that only you control.
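To make the idea concrete, here is a minimal sketch of one common second factor, a time-based one-time password (TOTP) as described in RFC 6238, written with only the Python standard library. It is not taken from any particular product; the function names `totp` and `verify_totp`, the 30-second step, and the one-step drift window are assumptions chosen for illustration. A production system should rely on a vetted authentication library and protected secret storage.

```python
import hashlib
import hmac
import struct


def totp(secret: bytes, at_time: float, digits: int = 6, step: int = 30) -> str:
    """Compute an RFC 6238 TOTP code (HMAC-SHA1) for the given Unix time."""
    counter = int(at_time) // step
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation, as in RFC 4226
    value = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(value % 10 ** digits).zfill(digits)


def verify_totp(secret: bytes, submitted: str, at_time: float,
                window: int = 1, step: int = 30) -> bool:
    """Accept the code for the current step or up to `window` steps either side."""
    for drift in range(-window, window + 1):
        t = at_time + drift * step
        if t < 0:
            continue  # no valid time step before the Unix epoch
        expected = totp(secret, t, step=step)
        # Constant-time comparison avoids leaking how many digits matched.
        if hmac.compare_digest(expected.encode(), submitted.encode()):
            return True
    return False
```

A caller with a cloned voice can imitate how someone sounds, but cannot produce the current code without the shared secret held on the real person's device. That is why a request backed only by a familiar voice should never be treated as verified.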

Regular Updates

Because cybersecurity threats evolve rapidly, organizations need to audit their security and data breach policies regularly. Any loophole that is found must be fixed immediately to avoid data loss.

Privacy Control

Avoid unnecessary data sharing and review the permissions granted to voice-enabled AI devices. When installing a new app or software, check the list of permissions it requests before granting them to a third party.

Looking Forward to a More Suitable Solution to Such Attacks

As AI technology advances, attackers find creative ways to misuse it and take social engineering to the next level. Businesses therefore need to stay alert and train their employees to recognize such fraudulent activities.

It’s impossible to monitor each employee’s activities, which makes employees the weakest link in this scenario. Through Threatcop’s security awareness training, known as TSAT, organizations can keep their employees ahead of threat actors.

What sets TSAT apart is its commitment to creating a people security culture within organizations. It’s not just about temporary awareness; it’s about instilling a lasting, vigilant mindset among employees. By transforming workforces into an informed, aware, and proactive defense line, TSAT significantly reduces the risk of falling victim to AI voice cloning attacks and other sophisticated cyber threats.

All in all, it’s important to note that scams like these are ongoing rather than one-off events, which means there’s a high chance of receiving scam calls, messages, and emails at regular intervals. Stay alert for the signs described above to mitigate the risk to your organization.

Technical Content Writer at Threatcop

Ritu Yadav is a seasoned Technical Content Writer at Threatcop, leveraging her extensive experience as a former journalist with leading media organizations. Her expertise combines in-depth cybersecurity research with informative and engaging content.